Creating Epic Worlds and Engaging Content…

Using Epic Games' Unreal Engine to Create Content for Use in Live Performance

by Todd F. Edwards, Omar Al-Taie, Emma Michalski, and Inna Sahakyan

The live performance industry has used digital resources for decades to aid in the creation and presentation of content in theater, dance, music, and performance art. Technologies routinely used in film and television are now finding their way into live performance, and the lines between live and virtual storytelling blur more each day. Live performance presented through mediated forms such as video games and virtual experiences is becoming increasingly common. Additionally, the Covid-19 pandemic of 2020 and now 2021 has made live performance more difficult, forcing artists, designers, and producers to find alternative ways of telling their stories and presenting their work to audiences.

The scope of this project was to continue my ongoing research into technologies that are commonplace in the film and television industry and to explore how they can be used in live performance. Over the past several years, the generous support of the CURI (Collaborative Undergraduate Research and Inquiry) program at St. Olaf College has provided me with funding and student research positions to aid in these explorations. My goal has been to see how these tools can help us create content that drives the storytelling process in live performance. The use of lighting, sound, and media is commonplace in our modern production process and can be traced as far back as shadow puppets and flickering firelight creating imagery on cave walls to help share our stories. Mediated content can be a static image or video that sets a scene or creates a backdrop or environment, or it can be a heightened spectacle that sets the tone or creates a mood to aid the storytelling. When we see events like the Super Bowl Halftime Show, the opening ceremonies of the Olympic Games, or spectacle-filled concerts, we are inspired to recreate that spectacle in our own storytelling and to find new ways to push the envelope with each production. It is no wonder that video game technologies have become the next big asset in the theater production process. Game technology, VR, and gaming applications are not new to live performance; they have been around for many years. What is new is the scope and scale at which these technologies can be leveraged in live production, as well as their relative ease of use and accessibility. Covid-19 has forever changed the world we live in and the work we do in the live entertainment industry.
Given the tragic death toll and the pandemic's worldwide impact on quality of life, I find it hard to acknowledge any positive contributions brought about by this virus. However, the cancellation of productions worldwide has forced us to find other outlets for our creative energies and to discover new and exciting ways to meet the needs of productions and share them with our audiences. This has fast-tracked the use of streaming, mediated, and cinematic tools for live performance, and it was this situation that brought about my project.

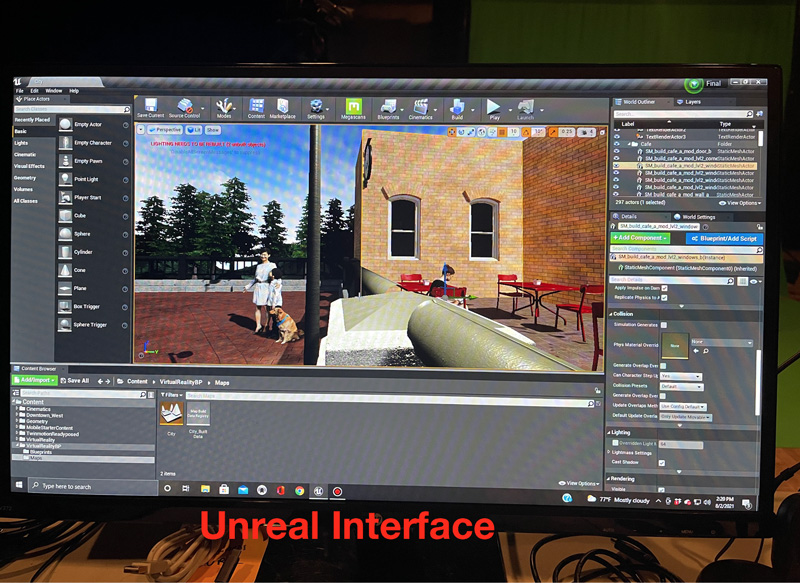

A primary application that we explored in this project was Unreal Engine. The program is not new: it has been used for years in the video game industry to create some of the most advanced and visually stunning games in history. Early versions of the program relied heavily on coding to create content; the engine uses the C++ language, and users wrote code to create and output the program's content. The problem is that many designers, artists, and other creative individuals are more visually driven than analytically minded. I had wanted to learn Unreal Engine for years but always found it too intimidating and inaccessible because of its heavy reliance on coding. Recent versions of Unreal Engine have become more graphical in nature and resemble the CAD, 3D modeling/rendering, graphics, and video applications we use routinely in the live production and entertainment industry. This shift, along with the engine's adoption by HBO's Westworld (https://www.unrealengine.com/en-US/spotlights/hbo-s-westworld-turns-to-unreal-engine-for-in-camera-visual-effects) and Disney's The Mandalorian (https://www.unrealengine.com/en-US/blog/forging-new-paths-for-filmmakers-on-the-mandalorian), has made Unreal Engine a mainstream creation program for visual storytelling. The media powerhouse Disguise (formerly d3 Technologies) has brought Unreal Engine even further into live performance (https://www.disguise.one/en/insights/news/rx-renderstream-virtual-production/). Unreal Engine has truly ushered in an era of real-time content creation and virtual interactivity.

Seeing the power of this technology and how it can revolutionize mediated storytelling makes us want to include it in our productions. In our project, we spent 10 weeks teaching ourselves Unreal Engine and finding workflows that made sense for regional, smaller professional, community, and academic production venues. We found the learning curve substantial and often frustrating. We used online training from Epic Games, Udemy, and LinkedIn Learning to learn the basics of the program. With patience, Unreal Engine's basic features are no more difficult to master than those of other high-end CAD and 3D applications. The real frustration comes from the program's sheer power: there are nearly limitless combinations of options for rendering and outputting content. The final product can be stills, video, or executable files for computers and VR headsets.

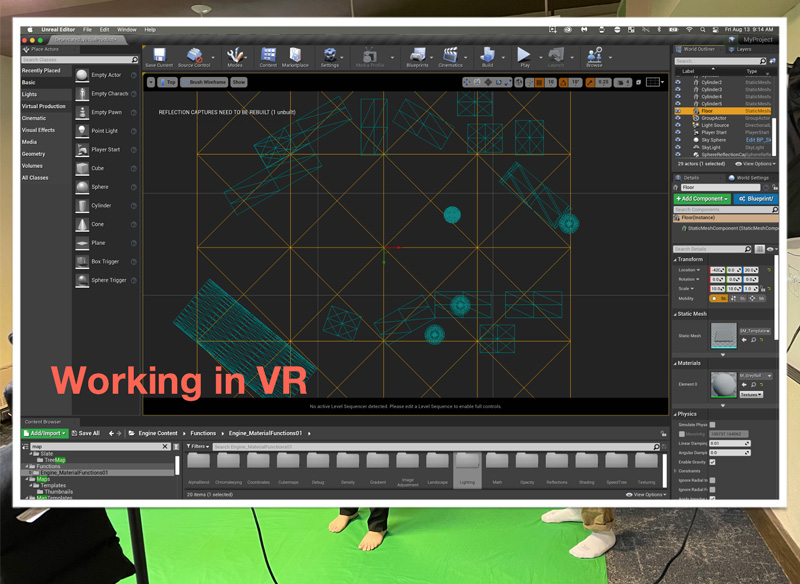

YouTube videos proved invaluable in our learning process, but again, the program's many options forced us to watch several tutorials on the same topic. We spent three days unsuccessfully trying to output content to a VR headset by following official training and YouTube instructions, and then found another YouTube video that mentioned, “make sure that you select a specific option or the entire process will fail.” Three days of frustration ended.

If you plan to use your content in VR, several drivers, SDKs, and plug-ins must be installed. For those of us in academia, an institution's IT build practices and security protocols may make it difficult or impossible to install these resources. Unreal Engine runs on both Mac and Windows, but many of the VR and live streaming add-ons are currently Windows-only. You can create content on, and easily transfer it between, Windows and Mac platforms, but Windows is the best platform for Unreal Engine (this pains me as a long-time Mac guy). Our institution's IT builds made it impossible for us to fully utilize college computers, and I ended up purchasing a Windows computer to complete our research. The more robust the computer, the better. Check the Unreal Engine website for specifications (https://docs.unrealengine.com/4.26/en-US/Basics/RecommendedSpecifications/), and use the drop-down selector in the upper right to switch between platforms and see their minimum requirements. Unreal Engine is free to use; Epic charges royalties only when gross revenue from monetized content built with the engine exceeds $1,000,000 (https://www.unrealengine.com/en-US/faq).
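To make the royalty threshold concrete, here is a minimal Python sketch of the arithmetic. It assumes the terms Epic published around the time of this project: a 5% royalty applied only to lifetime gross revenue above the first $1,000,000 per product. The 5% rate, the function name `estimate_royalty`, and the sample revenue figures are our own illustrative assumptions, not part of the original text, so check the current Unreal Engine EULA before relying on any of these numbers.

```python
def estimate_royalty(gross_revenue: float,
                     threshold: float = 1_000_000.0,
                     rate: float = 0.05) -> float:
    """Hypothetical royalty estimate for one product built with Unreal Engine.

    Assumption: a flat rate (default 5%) applies only to the portion of
    lifetime gross revenue above the threshold (default $1,000,000).
    """
    if gross_revenue < 0:
        raise ValueError("gross_revenue must be non-negative")
    # Revenue at or below the threshold owes nothing under this model.
    taxable = max(0.0, gross_revenue - threshold)
    return taxable * rate


if __name__ == "__main__":
    # Illustrative figures only, not data from this project.
    for revenue in (250_000, 1_000_000, 1_500_000):
        print(f"${revenue:,.0f} gross -> ${estimate_royalty(revenue):,.2f} royalty")
```

Under this model, a production that earns $1,500,000 would owe royalties only on the $500,000 above the threshold, while a typical academic or community production that never approaches the threshold would owe nothing.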

Our original goal for this project was to create environments that live actors would interact with. We also hoped that digital actors would interact with the live performers on stage. This was a very ambitious undertaking, since we were learning Unreal Engine while creating our content. We often compared the process to “creating the car as we drive it down the interstate.” We re-evaluated and adjusted our goals throughout the project. My three student researchers were given the challenge of creating unique worlds that fit into the overall narrative of our project. Below, each of them shares observations about their summer research experience.

Left to right: Emma Michalski, Omar Al-Taie, Todd Edwards, Inna Sahakyan

Omar: In your own words, tell us about CURI and Unreal Engine (the good and the bad, the problems, the successes, etc.).

Over the past two years of the pandemic, many people have escaped into the world of digital arts to find joy and pleasure. This was a great inspiration for our CURI research project. Our team of three students and our professor learned to combine many different hardware devices and software programs to create a research project that would deepen the audience's digital experience of theatrical arts. The integration of technology revolved around creating different realms for VR headsets that would immerse the actors in the play and give the audience access to those realms. However, as I learned through the research, an open mindset must be maintained, because many challenges arise on the way to rewarding results.

I would personally describe the research in three periods: 1. research into others' findings and technology, 2. brainstorming, and 3. the creative process.

Starting the research process by brainstorming with the team and proposing different ideas for making the research feasible was vital to our goal and purpose. We each had different ideas that we shared and explored more deeply through experimentation. The amount of knowledge gained in the first period determines how much one can implement in the creation period. I was able to learn about the technology from many different resources, including LinkedIn Learning courses, Coursera classes, and YouTube videos. The bombardment of information about these mediums, which I had never experienced before, was overwhelming. However, consistency was key to learning. The software we mostly learned was Unreal Engine 4, which we used to create the VR realms from digital assets. I was also fascinated by the possibilities of VR in our current world and how it could provide different experiences for individuals all around the earth. With the skills to use such software, combined with the accessibility of VR headsets, an artist could create any realm imaginable. However, I did not expect the process to be so much more challenging than I imagined.

When researching with technology, the researcher is limited by every small variable: a device that does not support a certain software version or the latest updates, or simply not having the latest technology. However, my team and I always tried to keep our spirits high and look for new solutions to each challenge we faced. I was also personally surprised by how much time rendering 3D digital graphics requires. When setting a research plan, it is essential to account for the time that rendering consumes and to use that time to perfect the project through brainstorming or further learning of the technologies.

Despite the many modifications we chose to make to the project, the result was a new idea within the theater and technology community that I hope will be researched even further. I hope to further my skills in Unreal Engine and VR, especially by promoting a space for experimenting with these technologies at St. Olaf alongside Professor Todd Edwards, and by using my coding skills to create assets for other projects.

Researching with CURI after my freshman year at St. Olaf was a great experience to further my understanding of the technological advancements we currently have and how we could build new creations through hard work, team efforts, and creativity. Furthermore, I am extremely appreciative of this experience and hope it ignites future research opportunities that would further bridge the two realms of technology and theatre.

Emma: In your own words, tell us about CURI and Unreal Engine (the good and the bad, the problems, the successes, etc.).

As an artist and performer, I was immediately drawn to this project combining virtual reality and live performance. The chance to create something beautiful and fully immersive was an opportunity I could not pass up. Before this project, I had never worked with virtual reality, so my goal this summer was to learn more about this new, amazing form of art.

To start our project, we decided to create a story that we would tell through a live performance. After a few days of brainstorming, we eventually decided upon the idea of a mad scientist performing experiments on a victim. For each experiment, he would place the victim into a virtual reality headset and show him a new world. These worlds were memories of the victim, starting out as happy but slowly progressing to the horrific. To create these different worlds, we used Unreal Engine.

This technology was not the easiest thing for me to learn as an artist. Learning Unreal Engine involved a great deal of trial and error, and teaching myself the program through tutorials threw me for a loop. For me, learning came down to practice: the more time I spent using the engine, the more comfortable I became with the software. There were certainly times of frustration when I envisioned something that I could not create with my skill set at the time. Ultimately, YouTube became my best friend when it came to learning Unreal. YouTube has so many free, helpful tutorials, which helped me reach a point where I felt comfortable creating my own world in Unreal. If you can dream it, you can do it with Unreal; the possibilities with this software are truly endless.

After weeks of practice, I was finally comfortable enough with Unreal to create my world that would be used in our final short film. Throughout the process, I had a vision of an enchanted forest, somewhere a fairy or gnome might live. Using my imagination and newfound skills, I was able to make this idea come to fruition. I had finally created a beautiful, realistic world that I was proud of.

Unfortunately, that was only where our problems began. When compiling the world onto the headset, we realized that the Unreal format I had used was not compatible with the Oculus headset we were trying to put it on, and this issue led to several recreations of my original forest. Following new tutorials, we discovered that we needed to set up the world with the virtual reality option and make sure to create on the first level. I ended up recreating my world five times before we were able to get it to work on the headset. Although it was a joyful moment to see my world through the headset, we quickly realized that the headset couldn't keep up with everything in the world: the view was choppy and appeared almost 2D instead of 3D. This issue was prevalent in nearly all of our worlds. With just a few days left in our project, we realized that we would need to take a different direction to finish it.

After coming to terms with this realization, we decided to make a digital short instead of a live performance. Since we would not be able to put our worlds in the headset for the actor to see, we screen-recorded them. This allowed us to edit our worlds into our film and make sure they were portrayed exactly how we wanted. Although we were disappointed that we could not make our original idea work, we were still excited to combine our Unreal Engine creations with film.

In the end, we were able to create a short film and four worlds in Unreal that we were incredibly proud of. We overcame the roadblocks we faced, and although our project may not have looked exactly how we originally imagined it, we learned so much along the way. We will continue to gain knowledge about virtual reality and Unreal Engine as we explore them further and continue to bring this technology into the theater.

Inna: In your own words, tell us about CURI and Unreal Engine (the good and the bad, the problems, the successes, etc.).

The outstanding opportunity that St. Olaf provides for CURI researchers allows students to thoroughly explore a particular field of study. It opens a new perspective on undergraduate research and provides a platform to explore a future vocation and work etiquette. The unique and unusual focus of Virtual Production and Live Performance set it apart from the other offerings, which mainly concentrated on the natural sciences. The exploration designed by Professor Edwards introduced a completely unfamiliar world filled with unlimited opportunities. The project combined imagination and creativity with technical skills to think outside the traditional boundaries of live performance. Digital developments in many industries have promised to make their way into live performance, and software such as Unreal Engine makes that possible.

The engine is accessible for anyone to download and learn on their own. The numerous tutorials online provide a thorough introduction to the program, which encourages artists to realize their ideas. In addition, its Blueprint visual scripting option widens its audience by letting visual artists create content without any technical or programming background. However, accessibility becomes restricted for people with hardware limitations. Unreal Engine's recommended hardware and minimum software requirements demand a considerable investment in equipment powerful enough to run the engine without complications. Throughout our process, we encountered many obstacles related to hardware limitations: the college systems and our personal equipment did not have enough power to render and compile the digital environments each of us created. The creative process was inspiring and promised a good result. However, each detail of Unreal Engine opened a new area of research that we had been unaware of at the beginning. The tutorials mostly cover the specific steps an individual artist chose to follow in their own creative process, so many times we asked why and remained without an answer.

The complexity of the engine also allowed us to shift direction and explore another of its available options: its cinematographic elements led us to create a digital short reflecting the idea of our project. The engine has many layers to discover gradually, and our final product showed that even this short period of research taught us intermediate skills in controlling the program. Even though the final result was not what we expected, I believe it had its productive aspects. As with many other projects, an unexpected result is justified, because the journey remains educational.

Our next steps will include digging deeper into the why of the engine, as it still has many layers we haven't peeled back yet.

Concluding Comments

Unreal Engine has the potential to be a real game-changer for creating content for live performance. Its relatively steep learning curve and high-end hardware requirements may present challenges for those interested in integrating it into their workflow, but I believe the platform will change how we create content, previsualize our designs, and present digital and mediated assets, making Unreal Engine a single platform that serves the entire creative workflow from conception through creation to delivery. Its flexibility for live, real-time production can change how you accomplish your creative work. Given the complexity of the application, one could easily let one's experience with the program dictate the final design. I especially caution new users against this. Unreal Engine, like all other applications, should be a tool to realize your concept.

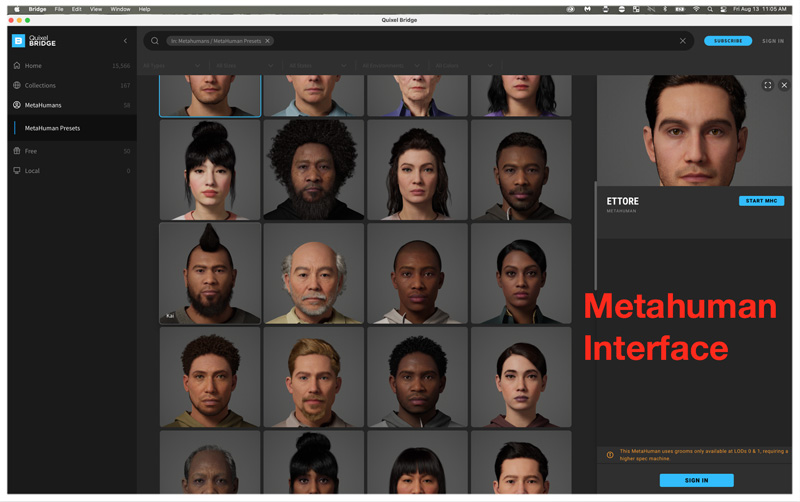

With the recent integration of DMX control and plug-ins for OBS and other live streaming applications, I cannot wait to see how this program will evolve. The current version of the program is 4.26; version 5 is in public beta and set to launch later this year. I intend to continue learning and mastering the program and to discover how it can be integrated into my creative workflow. My next project will explore implementing Unreal Engine MetaHumans that interact alongside live performers. MetaHuman technology is truly one of the final steps to crossing the uncanny valley.

Please visit the website associated with this project here:

https://innasahakian.wixsite.com/curi2021


Todd Edwards is an Associate Professor of Theater at St. Olaf College in Northfield, MN. His area of focus is Theater Design and Production.